What are Node.js streams?

Modern web platforms like Node.js have a powerful feature called streams, but what exactly is a stream, and why would you want to use one in your web or mobile application?

Streams are a powerful way to componentize code into specific focused modules and they help with scalability and realtime communication.

Version Information

- Author: Jeff Barczewski

- Published: July 25th, 2013

- Tags: nodejs, streams

- Node.js v0.10+ (latest stable is v0.10.13 as of this writing), but streams have generally been a part of Node.js from its early days

Definition and brief history

What is a stream?

A stream is a concept that was popularized in the early days of Unix. It is an input/output (I/O) mechanism for transmitting data from one program to another. Each Unix program has three standard streams (input, output, error). Unix programs can be chained together by piping the output from one program directly into the input of another. This allows each program to be very specialized in what it does, but the combination of these simple programs can create very complex systems.

The streaming data is delivered in chunks which allows for efficient use of memory and realtime communication.

An example of using streams in a Unix system might be to uncompress a gzipped tar file and pipe the output to the grep command to search for lines matching a token:

    # uncompress/untar file to stdout (-O) and search for 'bar'
    tar zxOf foo.tgz | grep bar

In this Unix streams example:

- tar reads in a compressed file and uncompresses the results to its standard output (stdout)

- the pipe | moves the data from the output of tar into the standard input (stdin) of the grep program

- grep searches the lines for bar and outputs only the lines that match.

Node.js streams

The Node.js streams API has evolved over time (especially with the release of v0.10), but the main concept has been there from its very early days. See the Node.js streams docs for the full API.

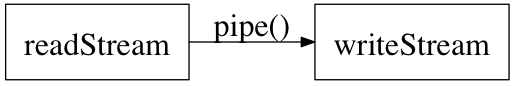

Here is a very simple example of reading a stream from file and piping to an HTTP response:

    var fs = require('fs'); // file system
    var http = require('http');

    var server = http.createServer(function (req, res) {
      // logic here to determine what file, etc
      var rstream = fs.createReadStream('existFile');
      rstream.pipe(res); // pipe file to response
    });

    // port 8000 is an arbitrary choice for this example
    server.listen(8000);

Why use them in building applications?

Smaller, focused modules

Decouple your programs or components into smaller, more focused modules which each do only one thing. Keep your code lean while still allowing complex processing by chaining modules together.
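As a rough illustration of this idea, here is a minimal sketch of two single-purpose stream modules chained with pipe. The names splitLines and matchLines are hypothetical, made up for this example, and both streams run in object mode so that each chunk passed between them is exactly one line.

```javascript
var Transform = require('stream').Transform;

// Hypothetical module 1: turn arbitrary text chunks into one line per chunk.
function splitLines() {
  var splitter = new Transform({ objectMode: true });
  var leftover = ''; // trailing partial line carried over to the next chunk

  splitter._transform = function (chunk, encoding, done) {
    var lines = (leftover + chunk.toString()).split('\n');
    leftover = lines.pop(); // the last piece may be an incomplete line
    for (var i = 0; i < lines.length; i++) {
      this.push(lines[i]);
    }
    done();
  };

  splitter._flush = function (done) {
    if (leftover) this.push(leftover); // emit a final line that had no newline
    done();
  };

  return splitter;
}

// Hypothetical module 2: pass along only the lines that contain `token`.
function matchLines(token) {
  var matcher = new Transform({ objectMode: true });
  matcher._transform = function (line, encoding, done) {
    if (String(line).indexOf(token) !== -1) this.push(line);
    done();
  };
  return matcher;
}
```

Neither module knows about the other. Something like source.pipe(splitLines()).pipe(matchLines('bar')) behaves much like the grep step in the Unix example, and either piece could be reused or swapped out on its own.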

Standard API for input/output which can even cross process boundaries

Chaining modules together requires a communication mechanism between these discrete modules. The Node.js streams API provides a standard mechanism for passing data in and out of a module, even across process or network boundaries. This gives you added flexibility in deployment and the ability to adapt to future requirements or load.
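To make the process-boundary point concrete, here is a small sketch that sends text to a separate Node.js process and reads the result back. The child's stdin and stdout are ordinary streams, so the parent uses the same calls it would use for a file or an HTTP response. The function name upperCaseInChild and the inline child script are assumptions for this example only.

```javascript
var spawn = require('child_process').spawn;

// Script run by the child process: upper-case whatever arrives on stdin.
var CHILD_SCRIPT =
  'process.stdin.on("data", function (chunk) {' +
  '  process.stdout.write(chunk.toString().toUpperCase());' +
  '});';

// Send `text` through a child process and call back with its output.
function upperCaseInChild(text, callback) {
  var child = spawn(process.execPath, ['-e', CHILD_SCRIPT]);
  var output = '';
  var finished = false;

  function finish(err) {
    if (finished) return; // 'error' and 'close' can both fire
    finished = true;
    callback(err, output);
  }

  child.stdout.setEncoding('utf8');
  child.stdout.on('data', function (chunk) { output += chunk; });
  child.on('error', finish);
  child.on('close', function (code) {
    finish(code === 0 ? null : new Error('child exited with code ' + code));
  });

  child.stdin.end(text); // write the input, then signal end of stream
}
```

Because only the stream interface is shared, the work done in the child here could later move to another machine behind a network socket without changing how the parent reads and writes.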

Streams can allow us to use less memory and serve more concurrent users

Streams help your programs scale to heavy load. Today’s web platforms and applications have serious load put on them by millions of desktop and mobile users who make heavy use of rich media and realtime information.

Traditionally you would simply load the content fully into memory for manipulation and serving, but today a more pragmatic approach is required. Streams provide a scalability advantage by only holding a portion of each file in memory at a time for manipulation and delivery.
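The following sketch shows what "a portion at a time" looks like in practice. It reads a file through a stream and records how many chunks arrive and how large the biggest one was. The function name measureChunks is hypothetical, and the chunk size is passed through the highWaterMark option of fs.createReadStream.

```javascript
var fs = require('fs');

// Read `path` chunk by chunk and report what was seen.
// Each 'data' event delivers at most `chunkSize` bytes, so the whole
// file never has to sit in memory at once.
function measureChunks(path, chunkSize, callback) {
  var stats = { chunks: 0, bytes: 0, largestChunk: 0 };

  fs.createReadStream(path, { highWaterMark: chunkSize })
    .on('data', function (chunk) {
      stats.chunks += 1;
      stats.bytes += chunk.length;
      stats.largestChunk = Math.max(stats.largestChunk, chunk.length);
    })
    .on('error', callback)
    .on('end', function () {
      callback(null, stats);
    });
}
```

By contrast, fs.readFile hands the callback a single buffer holding the entire file, so memory use grows with file size and with the number of concurrent requests.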

Realtime updates using streams and sockets

Streams, and a bidirectional variation called a socket, are very powerful for delivering realtime, continually updated content like stock market changes or social network updates. Applications open long-running connections (sockets) to a service, and updates are broadcast immediately as they happen, rather than an application having to continually poll a server for new updates.
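A minimal sketch of this push model, using the built-in net module: the server keeps each client socket open and writes every update to all of them as soon as it is published. The createBroadcaster function and its publish method are hypothetical names for this example, and the newline-delimited message format is an assumption.

```javascript
var net = require('net');

// Create a TCP server that remembers its connected clients and can
// broadcast a message to all of them without being asked (no polling).
function createBroadcaster() {
  var clients = [];

  var server = net.createServer(function (socket) {
    clients.push(socket);
    socket.on('error', function () {}); // ignore per-client errors in this sketch
    socket.on('close', function () {
      clients.splice(clients.indexOf(socket), 1); // forget closed connections
    });
  });

  function publish(message) {
    clients.forEach(function (socket) {
      socket.write(message + '\n'); // a socket is a writable stream
    });
  }

  return { server: server, publish: publish };
}
```

A caller would start it with something like broadcaster.server.listen(port) and call broadcaster.publish(update) whenever new data arrives. Each client just listens for 'data' events on its own socket.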

The Node.js event loop architecture really shines in being able to orchestrate all this asynchronous I/O without developer involvement.

Recapping reasons to use streams (and sockets)

- separation of concerns - smaller focused programs and modules

- standard API for input and output

- reduced memory use by only holding chunks in memory at a time

- long running realtime connections with quick temporal bursts of data
